PoliLoom

Hey there OpenSanctions community! I’m Johan. Friedrich asked me to have a go at some data extraction, and share the development process here, so let’s dive into it together.

We’re about to find out how great LLMs are at extracting metadata on politicians from Wikipedia and other sources. Our goal is to enrich Wikidata. This is a research project; the goal is to have a usable proof of concept.

Below is something that resembles a plan. I did my best to structure my thoughts on how I think we should approach this, based on a day or so of research. I’m also left with some questions, which I’ve sprinkled throughout this document.

If you have some insight or can tell me that I’m on the wrong track, I would be grateful!

Let’s get into it.

Database

We want to have users decide if our changes are valid or not, so for that we’ll have to store them first. But to know what has changed, we need to know what properties are currently in Wikidata.

We’ll be using SQLAlchemy and Alembic. In development we’ll use SQLite. In production PostgreSQL.

Schema

We’d be reproducing a small part of the Wikidata politician data model. To keep things as simple as possible, we’d have Politician, Source, Property, Position and a many-to-many HoldsPosition relationship entity.

- Politician would be politicians with names and a country.

- Source would be a web source that contains information on a politician.

- Property would be things like date-of-birth and birthplace for politicians.

- Position is all the positions from Wikidata with their country.

- HoldsPosition contains a link to a politician and a position, together with start & end dates.

Source would have a many-to-many relation to Politician, Property and Position.

Property and HoldsPosition would also hold information on whether they are newly extracted, and whether (and which) user has confirmed their correctness and updated Wikidata. Property would have a type, which would be either BirthDate or BirthPlace.
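To make the schema a bit more concrete, here is a minimal sketch of the tables. It uses the standard library `sqlite3` module and plain SQL so it runs without dependencies; the real project would express this as SQLAlchemy models with Alembic migrations. All table and column names (`is_extracted`, `confirmed_by`, the `*_source` link tables) are assumptions for illustration, only the entities themselves come from the plan above.

```python
import sqlite3

# Table and column names are illustrative assumptions; only the entities
# (Politician, Position, Property, HoldsPosition, Source) come from the plan.
SCHEMA = """
CREATE TABLE politician (
    id INTEGER PRIMARY KEY,
    wikidata_id TEXT UNIQUE,
    name TEXT NOT NULL,
    country TEXT
);
CREATE TABLE position (
    id INTEGER PRIMARY KEY,
    wikidata_id TEXT UNIQUE NOT NULL,
    name TEXT NOT NULL,
    country TEXT
);
CREATE TABLE property (
    id INTEGER PRIMARY KEY,
    politician_id INTEGER NOT NULL REFERENCES politician(id),
    type TEXT NOT NULL CHECK (type IN ('BirthDate', 'BirthPlace')),
    value TEXT NOT NULL,
    is_extracted INTEGER NOT NULL DEFAULT 0,  -- 1 = newly extracted by us
    confirmed_by TEXT                         -- NULL until a user confirms
);
CREATE TABLE holds_position (
    id INTEGER PRIMARY KEY,
    politician_id INTEGER NOT NULL REFERENCES politician(id),
    position_id INTEGER NOT NULL REFERENCES position(id),
    start_date TEXT,
    end_date TEXT,
    is_extracted INTEGER NOT NULL DEFAULT 0,
    confirmed_by TEXT
);
CREATE TABLE source (
    id INTEGER PRIMARY KEY,
    url TEXT UNIQUE NOT NULL
);
-- Many-to-many links from Source to the entities it mentions.
CREATE TABLE politician_source (
    politician_id INTEGER NOT NULL REFERENCES politician(id),
    source_id INTEGER NOT NULL REFERENCES source(id),
    PRIMARY KEY (politician_id, source_id)
);
CREATE TABLE property_source (
    property_id INTEGER NOT NULL REFERENCES property(id),
    source_id INTEGER NOT NULL REFERENCES source(id),
    PRIMARY KEY (property_id, source_id)
);
CREATE TABLE position_source (
    position_id INTEGER NOT NULL REFERENCES position(id),
    source_id INTEGER NOT NULL REFERENCES source(id),
    PRIMARY KEY (position_id, source_id)
);
"""


def create_database(path: str = ":memory:") -> sqlite3.Connection:
    """Open a SQLite database and create the sketch schema in it."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def unconfirmed_properties(conn: sqlite3.Connection, politician_id: int) -> list:
    """Return (type, value) pairs that were extracted but not yet confirmed."""
    rows = conn.execute(
        "SELECT type, value FROM property "
        "WHERE politician_id = ? AND is_extracted = 1 AND confirmed_by IS NULL "
        "ORDER BY id",
        (politician_id,),
    )
    return rows.fetchall()
```

The `CHECK` constraint on `property.type` is one way to restrict the type to BirthDate or BirthPlace; an enum column would do the same job in SQLAlchemy.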

Populating our database

We can get a list of all politicians in Wikidata by querying, either by occupation:

    SELECT DISTINCT ?politician ?politicianLabel WHERE {
      ?politician wdt:P31 wd:Q5 . # must be human
      ?politician wdt:P106/wdt:P279* wd:Q82955 . # occupation is politician or subclass
      SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }
    }
    LIMIT 100

Or by position held:

    SELECT DISTINCT ?politician ?politicianLabel WHERE {
      ?politician wdt:P31 wd:Q5 . # must be human
      ?politician wdt:P39 ?position . # holds a position
      ?position wdt:P31/wdt:P279* wd:Q4164871 . # position is political
      SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }
    }
    LIMIT 100

We could also fetch both and merge the sets. However, these queries are slow, and have to be paginated.
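Pagination and merging could look roughly like the sketch below. It assumes the base query is passed in without its own `LIMIT`, and it adds an `ORDER BY ?politician` so that pages don't shift between requests (which may well make the slow queries slower). The function names, page size and User-Agent string are made up for illustration; the endpoint and the JSON result layout are those of the public Wikidata Query Service.

```python
import json
import urllib.parse
import urllib.request

WIKIDATA_SPARQL_URL = "https://query.wikidata.org/sparql"


def page_query(base_query: str, page: int, page_size: int = 1000) -> str:
    """Append ORDER BY / LIMIT / OFFSET to a query that has no LIMIT of its own."""
    return (
        f"{base_query.rstrip()}\n"
        f"ORDER BY ?politician\n"
        f"LIMIT {page_size}\n"
        f"OFFSET {page * page_size}"
    )


def run_query(query: str) -> list:
    """Send one query to the Wikidata Query Service and return its result rows."""
    url = WIKIDATA_SPARQL_URL + "?" + urllib.parse.urlencode(
        {"query": query, "format": "json"}
    )
    request = urllib.request.Request(
        url, headers={"User-Agent": "PoliLoom-sketch/0.1 (example)"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)["results"]["bindings"]


def qid(binding: dict, variable: str = "politician") -> str:
    """Turn 'http://www.wikidata.org/entity/Q42' into 'Q42'."""
    return binding[variable]["value"].rsplit("/", 1)[-1]


def fetch_all(base_query: str, page_size: int = 1000, run=run_query) -> set:
    """Walk through all pages of a query and collect the politician IDs."""
    ids = set()
    page = 0
    while True:
        rows = run(page_query(base_query, page, page_size))
        ids.update(qid(row) for row in rows)
        if len(rows) < page_size:  # a short page means we reached the end
            return ids
        page += 1
```

Merging both approaches is then just a set union, for example `fetch_all(by_occupation) | fetch_all(by_position)`, where the two arguments are the query strings above without their `LIMIT 100`.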

Wikipedia pages should have a Wikidata item linked, and Wikidata records of politicians should almost always have a Wikipedia link, so we should be able to connect these entities.

There are probably politician Wikidata records that don’t have a linked Wikipedia page, and Wikipedia pages that don’t have a Wikidata entry. We could try to process Wikipedia politician articles that have no linked entity the same way as the random web pages we’ll query.

When we populate our local database, we want to have Politician entities together with their Positions and Properties. While populating it, we’d also try to get the Wikipedia link of each Politician in English and in their local language.
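One way to get those Wikipedia links is the `wbgetentities` action of the Wikidata API, which can return sitelinks with full URLs. The sketch below only builds the request URL and parses a response; the helper names are made up, and mapping a language code to a site ID by appending `wiki` is an assumption that holds for common languages such as `en` or `nl` but not for every Wikipedia.

```python
import urllib.parse

WIKIDATA_API_URL = "https://www.wikidata.org/w/api.php"


def sitelinks_url(qids: list, languages: list) -> str:
    """Build a wbgetentities URL asking for Wikipedia sitelinks of some entities."""
    params = {
        "action": "wbgetentities",
        "ids": "|".join(qids),
        "props": "sitelinks/urls",
        # Assumption: site ID is '<language code>wiki', e.g. 'enwiki'.
        "sitefilter": "|".join(f"{lang}wiki" for lang in languages),
        "format": "json",
    }
    return WIKIDATA_API_URL + "?" + urllib.parse.urlencode(params)


def wikipedia_links(response: dict) -> dict:
    """Map each entity ID in a wbgetentities response to {site: article URL}."""
    links = {}
    for entity_id, entity in response.get("entities", {}).items():
        links[entity_id] = {
            site: link["url"]
            for site, link in entity.get("sitelinks", {}).items()
            if "url" in link
        }
    return links
```

An entity without a linked article simply ends up with an empty dict, which is also how we would spot Wikidata records that have no Wikipedia page.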

Questions

- Do we filter out deceased people?

- If we’d be importing from the Wikipedia politician category, do we filter out all deceased people with a rule/keyword based filter?

Extraction of new properties

Once we have our local list of politicians, we can start extracting properties. We basically have 2 types of sources: Wikipedia articles that are linked to our Wikidata entries, and random web sources that are not.

Then there are 2 types of information that we want to extract: specific properties like date of birth and birthplace, and political positions. Extracting properties should be relatively simple. For positions, we’d have to know which positions we’d like to extract.

For extraction we’d feed the page to an OpenAI model and use structured outputs to get back data that fits our model.
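As a rough idea of what "data that fits our model" could mean, here is a sketch of a JSON Schema we might hand to the model, plus a parser for the JSON it returns. The field names and the shape of the schema are assumptions, and the actual request to the model is left out on purpose.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical JSON Schema describing what we would ask the model to return.
EXTRACTION_SCHEMA = {
    "type": "object",
    "properties": {
        "birth_date": {"type": ["string", "null"]},
        "birth_place": {"type": ["string", "null"]},
        "positions": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "start_date": {"type": ["string", "null"]},
                    "end_date": {"type": ["string", "null"]},
                },
                "required": ["name", "start_date", "end_date"],
                "additionalProperties": False,
            },
        },
    },
    "required": ["birth_date", "birth_place", "positions"],
    "additionalProperties": False,
}


@dataclass
class ExtractedPosition:
    name: str
    start_date: Optional[str] = None
    end_date: Optional[str] = None


@dataclass
class Extraction:
    birth_date: Optional[str] = None
    birth_place: Optional[str] = None
    positions: List[ExtractedPosition] = field(default_factory=list)


def parse_extraction(raw_json: str) -> Extraction:
    """Turn the model's JSON answer into typed objects we can store."""
    data = json.loads(raw_json)
    positions = [
        ExtractedPosition(p["name"], p.get("start_date"), p.get("end_date"))
        for p in data.get("positions", [])
    ]
    return Extraction(data.get("birth_date"), data.get("birth_place"), positions)
```

Allowing `null` for every value gives the model a way to say "not found on this page" instead of guessing.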

We can query all political positions in Wikidata. At the time of writing that returns 37631 rows:

    SELECT DISTINCT ?position ?positionLabel WHERE {
      ?position wdt:P31/wdt:P279* wd:Q294414 . # elected or appointed political position
      SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }
    }

It would be nice if we could tell our LLM which positions we’d like to extract, instead of having it do whatever and then having to match its output to the positions we know. However, 37631 rows is a lot of tokens. As we know the country of the politician before extracting the positions, we can select the subset of positions for that country and pass only those to our LLM, so we can be sure to extract correct references.
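The country filter could be sketched as below. Positions are assumed to be plain dicts with `id`, `label` and `country` keys (a made-up shape), and the helper names are hypothetical. The last function drops any ID the model returns that was not in the list we gave it.

```python
def positions_for_country(positions: list, country: str) -> list:
    """Keep only the positions that belong to the politician's country."""
    return [p for p in positions if p["country"] == country]


def positions_prompt(positions: list) -> str:
    """Render the allowed positions as 'ID: label' lines for the prompt."""
    return "\n".join(f"{p['id']}: {p['label']}" for p in positions)


def keep_known_positions(extracted_ids: list, allowed: list) -> list:
    """Discard position IDs that were not among the ones we offered."""
    allowed_ids = {p["id"] for p in allowed}
    return [pid for pid in extracted_ids if pid in allowed_ids]
```

Checking the returned IDs against the offered subset is cheap and catches the case where the model invents a reference anyway.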

Questions

- How do we handle incomplete birth dates (1962 / Jun 1982)?

- How do we manage names of people in different scripts?
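On the incomplete birth dates: Wikidata itself stores time values with a precision (9 for year, 10 for month, 11 for day), so one possible answer is to keep that precision next to the date instead of padding it to a full date. A minimal sketch, assuming we ask the model for ISO-style partial dates such as `1962` or `1982-06`; the function name is made up, and only the `time` and `precision` fields of a Wikidata time value are produced here.

```python
import re

# Precision codes used by Wikidata time values.
PRECISION_YEAR, PRECISION_MONTH, PRECISION_DAY = 9, 10, 11

_PARTIAL_DATE = re.compile(r"^(\d{4})(?:-(\d{2}))?(?:-(\d{2}))?$")


def to_wikidata_time(value: str) -> dict:
    """Convert 'YYYY', 'YYYY-MM' or 'YYYY-MM-DD' into a time string plus precision.

    Note: this does not check that the month or day is in a valid range.
    """
    match = _PARTIAL_DATE.match(value.strip())
    if not match:
        raise ValueError(f"Unsupported date format: {value!r}")
    year, month, day = match.groups()
    if day:
        precision = PRECISION_DAY
    elif month:
        precision = PRECISION_MONTH
    else:
        precision = PRECISION_YEAR
    # Unknown parts are written as '00', as Wikidata does for partial dates.
    time = f"+{year}-{month or '00'}-{day or '00'}T00:00:00Z"
    return {"time": time, "precision": precision}
```

For example, `to_wikidata_time("1982-06")` gives `{"time": "+1982-06-00T00:00:00Z", "precision": 10}`.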

Wikipedia

Scraping Wikipedia would be relatively simple. During our Wikidata import we’ve stored Wikipedia links. We’d have to fetch those for each Politician and extract properties / positions from the data. We’d try to get the page in English and in the local language of the politician.

Scraping random webpages

Then there are the random pages. For these we’d have to link any information we extract to a Wikipedia article / Wikidata entity ourselves.

I think we should handle 2 general page types here, index pages and detail pages.

- For index pages, we can have the LLM output an XPath/CSS selector for all detail page links, and possibly for pagination.

- For detail pages we try to extract what we can, then try to find the related entity in our database. We can do a similarity search for entities with the same properties and use a score threshold to decide whether we should try to update a found entity.
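The similarity search with a threshold for detail pages could start out as simple as the sketch below. It compares names with `difflib` from the standard library and nudges the score when birth dates agree or conflict. The weights (`+0.2`, `-0.3`), the `0.85` threshold and the dict shape are arbitrary assumptions that would need tuning on real data.

```python
from difflib import SequenceMatcher
from typing import Optional


def name_similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity between 0.0 and 1.0."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()


def match_score(candidate: dict, known: dict) -> float:
    """Score how likely an extracted person is the same as a known politician."""
    score = name_similarity(candidate["name"], known["name"])
    # Assumption: an agreeing birth date is strong evidence, a conflict even more so.
    if candidate.get("birth_date") and known.get("birth_date"):
        if candidate["birth_date"] == known["birth_date"]:
            score += 0.2
        else:
            score -= 0.3
    return max(0.0, min(1.0, score))


def best_match(candidate: dict, known_politicians: list, threshold: float = 0.85) -> Optional[dict]:
    """Return the best-scoring known politician, or None if nobody clears the threshold."""
    best = max(known_politicians, key=lambda k: match_score(candidate, k), default=None)
    if best is None or match_score(candidate, best) < threshold:
        return None
    return best
```

Returning `None` below the threshold leaves room for treating the page as a possible new politician instead of forcing a link.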

My mind is going wild on building some sort of scraper-building robot that generates XPath selectors with an LLM, then scrapes pages with those selectors, re-running the XPath generation when the scraper breaks. But I think that would only make sense if we want to run our scraper often. The simplest thing would be to feed everything into the model.

Questions

- Do we archive web sources?

- How often do we want to run our tool?

- How do we handle multilingual variations in names?

- How will we handle conflicting information between sources? (e.g., Wikipedia says one birth date, government site says another)

API

We’ll create a FastAPI API that can be queried for entities with properties and positions. There should also be a route for users to mark a politician or position as correct.

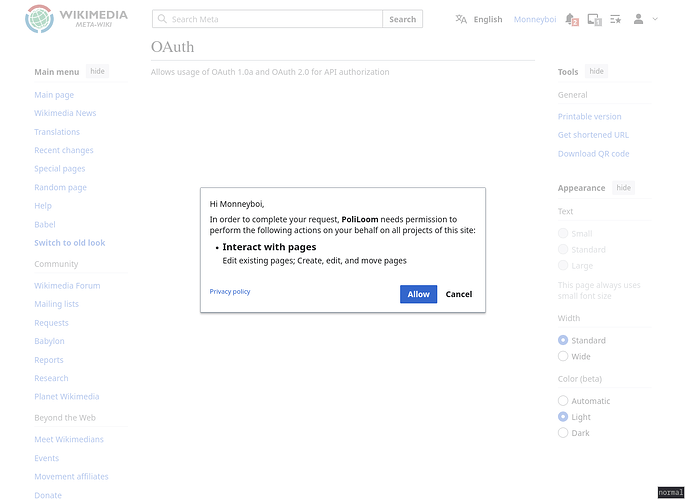

Authentication could probably be handled by the MediaWiki OAuth system.

Routes

- /politicians/unconfirmed - lists politicians with unconfirmed Property or HoldsPosition

- /politicians/{politician_id}/confirm - Allows the user to confirm the properties/positions of a politician. Should allow for the user to “throw out” properties / positions in the POST data.
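The confirm route with its "throw out" option boils down to a small piece of logic that a FastAPI handler could wrap. The sketch below is framework-free on purpose; the `Statement` class, its fields and the `discard` parameter are assumptions about how the POST data might be modelled, not a settled design.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class Statement:
    kind: str                      # "property" or "position"
    id: int
    confirmed_by: Optional[str] = None
    discarded: bool = False


def confirm_politician(
    statements: List[Statement],
    user: str,
    discard: Iterable[Tuple[str, int]] = (),
) -> List[Statement]:
    """Confirm all pending statements of a politician, except the thrown-out ones.

    `discard` holds (kind, id) pairs taken from the POST data.
    Returns the statements that were confirmed by this call.
    """
    discard_keys = set(discard)
    confirmed = []
    for statement in statements:
        if statement.confirmed_by is not None or statement.discarded:
            continue  # already handled earlier
        if (statement.kind, statement.id) in discard_keys:
            statement.discarded = True
        else:
            statement.confirmed_by = user
            confirmed.append(statement)
    return confirmed
```

The returned list is what would then be written to Wikidata on the user's behalf.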

CLI commands

The API project would also house the CLI functionality for importing and enriching data.

- Import single ID from Wikidata

- Enrich single ID with data from Wikipedia
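A sketch of how these two commands could be laid out, using `argparse` from the standard library. The program name, subcommand names and argument names are assumptions, and the project may well end up using a different CLI library.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Create a parser with one subcommand per CLI task."""
    parser = argparse.ArgumentParser(prog="poliloom")
    subcommands = parser.add_subparsers(dest="command", required=True)

    import_cmd = subcommands.add_parser(
        "import", help="Import a single entity from Wikidata"
    )
    import_cmd.add_argument("wikidata_id", help="Wikidata ID, for example Q42")

    enrich_cmd = subcommands.add_parser(
        "enrich", help="Enrich a single entity with data from Wikipedia"
    )
    enrich_cmd.add_argument("wikidata_id", help="Wikidata ID, for example Q42")

    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    # Placeholder: the real commands would call the import / enrichment code.
    print(f"{args.command}: {args.wikidata_id}")
```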

Confirmation GUI

We’d like users to be able to confirm our generated data. To do this we’ll build a Next.js GUI where users will be provided with statements and their accompanying source data / URL.

When users confirm the correctness of our generated properties, we’d like to update Wikidata on their behalf through the Wikidata API.

Questions

- What choices do we want to have our users make? Do we want to present single properties with a source link where we found the information? Or do we want to show a complete entity with all new information and (possibly multiple) source links?

- What source data do we want to show? Do we want to show links, or archived pages?

Project planning

Wikipedia articles are linked to Wikidata entities, saving us from having to find those links ourselves. That’s why I propose starting with extraction from these.

1. Populating a local database with politicians; this includes scraping Wikipedia and related Wikidata entries

2. Extraction of properties from Wikipedia articles

3. Confirmation GUI

4. Random web sources extraction

I think getting to point 3 and having a system that works is the most important thing. Point 4 is probably not easy and will require more budget than we have. But regardless, we’ll see how far we can get with that.

Interesting

- The Wikidata data model for politicians: Wikidata:WikiProject every politician/Political data model - Wikidata

- List of National Legislatures: https://www.Wikidata.org/wiki/Wikidata:WikiProject_every_politician/List_of_National_Legislatures

- The Wikipedia Wikidata link: Wikipedia:Finding a Wikidata ID - Wikipedia

- The Wikidata REST API: Wikidata:REST API - Wikidata

- MediaWiki OAuth: OAuth/For Developers - MediaWiki

You can find the development repository here: GitHub - opensanctions/poliloom: Help build the world's largest open database of politicians.

Please let me know if anything does not make sense, or if there’s something else that makes more sense. Also, I’d love to hear your opinion on any of the questions. I’m curious to hear what you think.